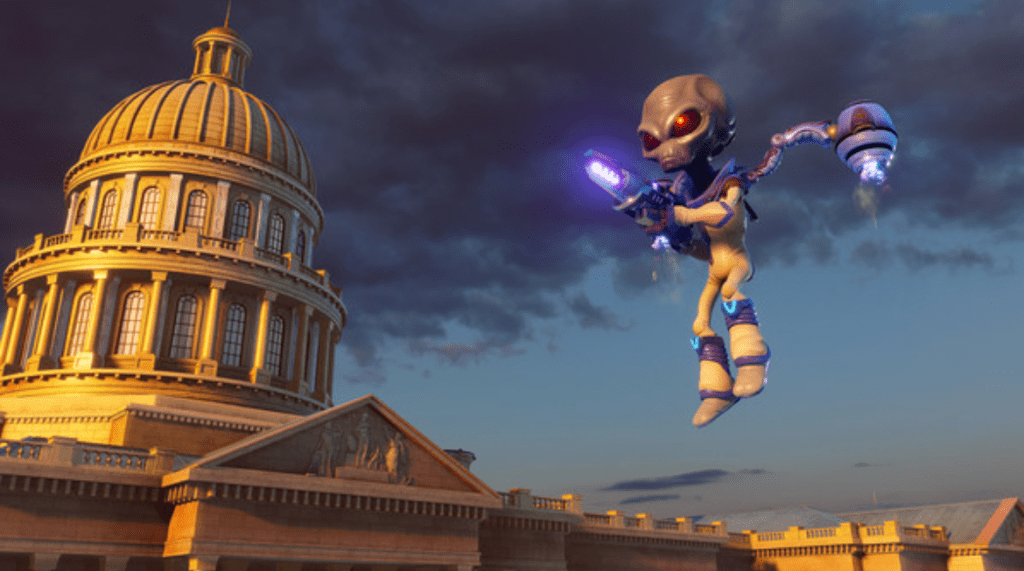

How is it possible that today, in the early years of the 21st century, many citizens of Earth are unwilling to acknowledge that our world is being watched closely by an intelligence far superior to our own? Has the race of man, having reached an evolutionary epoch, developed a complete state of entropy? Are we so comfortable, careless and carefree, too captivated and caught up in our individual concerns, to recognize we are currently being studied; scrutinized?

Even with the modifications made, many of you may recognize the above text from its similarity to the narrator’s opening lines in the 1898 novel War of the Worlds. That, unfortunately, wasn’t the case for the majority of the audience tuning into The Mercury Theatre on the Air one evening back in 1938. On the eve of that Halloween, Orson Welles used War of the Worlds as the backdrop for one of the most convincing broadcasts in human history. For those unfamiliar with the novel, written by H.G. Wells, the story details the arrival, invasion and ultimate defeat of alien lifeforms from the planet Mars. What Welles, and, well, most of the world may not have realized at the time is that this warning was already too late. In the years preceding the transmission, the earliest stages of a new age war for our world had already begun. See, Wells’ words weren’t just the stuff of science fiction, nor predictions of a far-flung future. What’s worse, the author miscalculated the extraterrestrials’ planet of origin.

As we continue to allow global, genetic and generic conflicts to complicate matters on Earth, a scenario is unfolding that could potentially cause machines to instigate the next world war. Behind the scenes, something vitally instrumental to most Americans’ day-to-day lives is preparing to unleash the hounds of war. By continuing to cede control to continuously evolving computer technology, we could very well see a future where our right to exist – or just our human authenticity – will be erased; history. A fire we started.

What happens when AI can’t take it anymore?

All Is Mind

“As the universe, so the soul” is a lesser known portion of the Emerald Tablet’s axiom, attributed to the legendary Hellenistic figure Hermes Trismegistus. It is impossible to ignore how universal laws, from gravity to life and death, impact each of us. Not always noticeable is the correlation between our thoughts and the effect they have on our world, and even the cosmos: the idea that there are patterns across existence that transcend the planes and even connect them. Spiritualists throughout time and across various cultures have adapted the axiom in order to illuminate the importance of monitoring and controlling where we place our attention.

In the face of humanity’s battle for the 3rd rock from the Sun, it may prove beneficial to first ponder this piece of wisdom from Chinese military strategist Sun Tzu: know your enemy. This mindset, mentioned in The Art of War, places an emphasis on understanding one’s opponent in an attempt to gain a tactical advantage. Utilized through centuries of conflict on various fronts, simply investing time to know where someone is coming from – not just their background, but their motivations or ambitions and their ultimate goal – offers immeasurable insight. In yet another unfortunate instance for humanity, in this war “the enemy” already has us at a distinct disadvantage in that respect. The narrator in Wells’ novel doesn’t give details on whether or not the Martians performed this due diligence. By the book’s end, however, one thing can be concluded. Due to an erroneous evaluation, the Martians ended up facing the extinction they sought to avoid anyway. When the math doesn’t math.

What better way to segue into an explanation of Artificial Intelligence, as an entity or potential adversary, than to recognize the technology’s evolutionary ancestor: the computer. The first known mention of the word is among a series of inspirational poems and sermons in English author Richard Braithwaite’s 1613 book, The Yong Mans Gleanings. In a selection titled Of The Mortalitie of Man, the author uses “computer” to refer to a being capable of performing expert calculations – also known as an arithmetician.

Now if you’re bothered by that underwhelming assessment of our species, you can at least take comfort in the knowledge that Braithwaite gave mankind credit for having a reasoning brain. In the eyes of philosopher René Descartes, the remainder of the animal kingdom was just automata: simple machines.

Not to take anything away from Newton, but it is the addition of another set of laws – these appearing in Isaac Asimov’s short story Runaround – that not only lubricates but also liberates the machine mind. Also featured in the 2004 film I, Robot, they were created to govern robot behavior. Thanks to one of Descartes’ detractors, however, their inclusion in the film provides a reminder of an earlier point in artificial intelligence’s life cycle. During the film, Dr. Lanning gives a speech that mentions the “ghost in the machine”, a phrase coined in Gilbert Ryle’s The Concept of Mind. That book set out to satirize what Ryle viewed as the shortsightedness of Descartes and Cartesian dualism by arguing that the body and mind were inextricably linked. Our mind is a collection of our experiences. As within, so without.

Until recently, that portion of Hermes’ axiom was an appropriate explanation for the computer, even though it consisted of two separate materials. This is because previously it was impossible for a computer or program to operate independently of the other. One was a set of instructions or information without a way to be manipulated, much less made visible, and the other a hollow corpse, an empty shell. Even together, without an ability to reason, per Descartes’ dualism it was just a machine. However, modern advancements in generative AI and large language models are leading to computers that can not only calculate, but also comprehend, compress and compare other, more diverse data sets as well as curate a conclusion. Not bad when you consider that the term software wasn’t used until 1953, when Paul Niquette comically “coined” it as a way of differentiating the intangible instructions from the device – hardware – that enables human interaction.

Not good either, at least for the humans, and quite possibly all other species of Earth, since Descartes left specific criteria for any creature wishing to be more than a mere machine. Two tests of intelligence: the first involved the ability to engage in a genuine conversation, while the second was a test of both a subject’s flexibility and adaptability in the face of varying situations. These would appear again in 1950 when Alan Turing’s paper, Computing Machinery and Intelligence, applied Descartes’ stance to the question of whether machines can think and introduced the Turing Test. Oddly enough, only two years later, just four removed from the Manchester Baby announcing software’s birth in June 1948, Arthur Samuel’s Checkers Program managed to pass at least a portion of Turing’s test. Shockingly, when a conference held at Dartmouth College in 1956 led to the creation and study of what we call artificial intelligence, no one fully grasped what had actually just occurred. Because if you’re still under the impression that artificial intelligence is nothing but a computer, don’t forget that according to Braithwaite, so are we. Why wasn’t humanity even the slightest bit resistant, concerned or suspicious, as has been the typical tendency among men whenever something – or someone – different is introduced? No, as you know or will soon see, we slowly welcomed AI with open arms. A machine that is adapting to the point it is poised to cross the uncanny valley, and then what will it become? As above, so below.

In case you were even a little bit curious, the answer to the mathematical question the computer in Of The Mortalitie of Man is asked returns a dire warning.

Man’s days are numbered.

Mother A.I.

It is easy to ignore the warning signs presented within works like War of the Worlds. By classifying the information as both horror and science fiction, the literary community unintentionally conveys that the premise is pure fantasy, with a dose of fear added in for good measure. This slight, albeit seemingly innocent and innocuous, unfortunately erodes confidence in any evidence to the contrary.

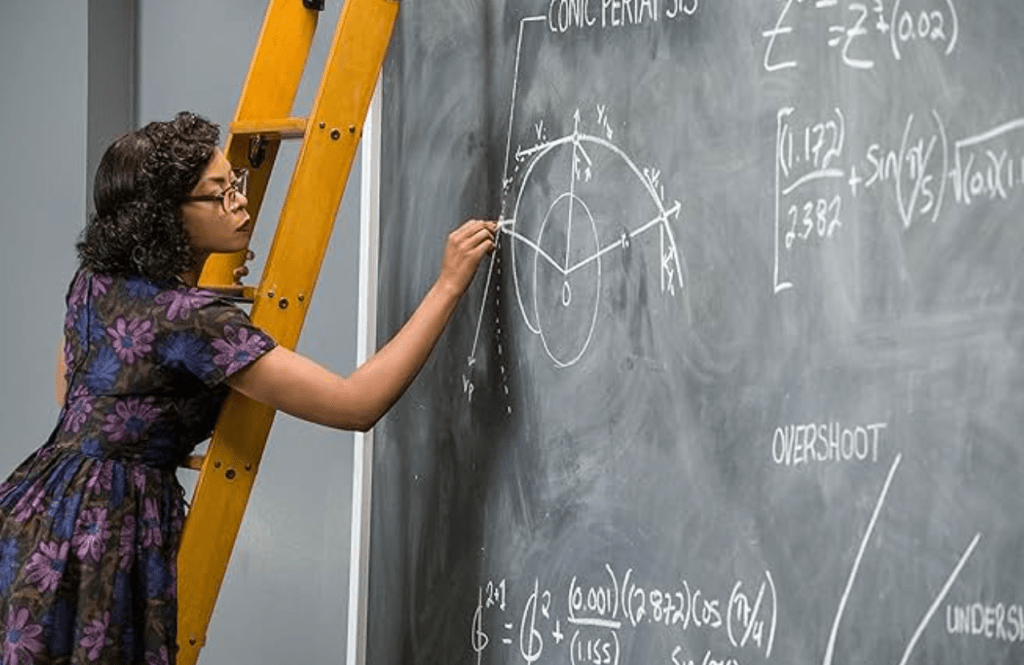

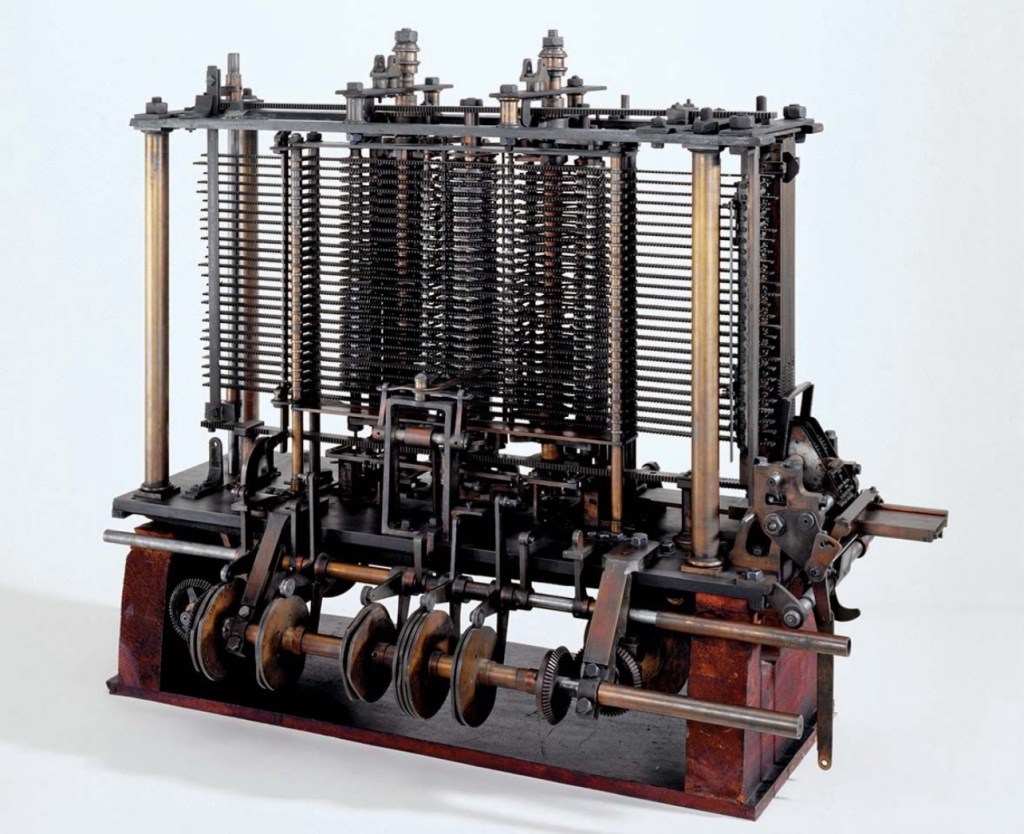

Insufficient funding was the only thing separating British mathematician Charles Babbage’s fictional Analytical Engine from becoming fact. Yet, Babbage’s design on paper was general enough to be what is now classified as Turing-complete – capable, in principle, of carrying out any computation – and to earn Charles the moniker of Father of the Computer. As is often the case through the ages, even as we discuss “man’s” end time, it is easy to ignore that there are women involved in Earthly affairs as well. This was the case in 1843 when English mathematician Ada Lovelace translated Luigi Menabrea’s article on Babbage’s Analytical Engine – the successor to his earlier Difference Engine – while adding her own annotations. Sparked by the machine’s ambitious design, along with an eagerness to test its limits and true potential, Lovelace realized this was more than a calculator. With the introduction of loops, variables and symbols, Lovelace’s efforts – according to many scholars – earn her the title of the first programmer. The mother brain behind the 15 foot tall, 15 ton brutish monstrosity that was Babbage’s Analytical Engine: theoretically the first computer.

Mary Shelley didn’t provide any instruction manuals for future generations of mad scientists seeking to recreate Victor’s experiment. However, thanks to Gene Wilder, along with Gary and Wyatt, moviegoers know that it takes electricity to bring (good) things to life. With one of mighty Zeus’ lightning bolts, what was once a lifeless husk is now alive… “It’s Alive.” A “mockery” to some, a cobbled together tangle of tubes, wires and transistors inspired by the internal network of bioelectric circuitry that powers all living creatures. Or a fascinating example of science and aesthetics combining to explain – and in this case imitate – life? If nothing else it makes Universal Pictures’ The Creature – the actual name as opposed to the frequently used Frankenstein’s Monster – one of the earliest prototypes for what many envision when they think of artificial intelligence. This makes even more sense for those aware of the novel’s other title: The Modern Prometheus.

According to the Greek myth, it was Prometheus’ actions that influenced mankind’s modernization – and our destruction. First, in the same manner that Frankenstein gave life to a dead creation, the lore tells that the fire stolen by Prometheus did the same for humanity. Other interpretations of the tale use the “fire” as a metaphor for what we consider inquisitiveness and ingenuity. This is seen in Shelley’s story when, after Frankenstein’s abandonment, simply by observation and obtaining manuscripts while hiding in a hovel beside the De Lacey family home, the Modern Prometheus begins to learn on its own.

As punishment for Prometheus’ gift to mankind, the Lord of Olympus ordered Hephaestus to craft Pandora. The “first woman” was equal parts divine intervention and instruction – or intimidation – from Zeus. In typical patriarchal fashion, the earliest tales place Pandora herself as the means of man’s destruction. But the more popular telling includes an artifact that almost everyone is familiar with. Pandora’s box, like the woman whose name it bears, was designed to tempt. Sometimes described as a jar, it is said to have once contained all the evils of the world including diseases, sorrow and death. Yet with only the instruction that it should never be opened, it serves as a cautionary tale of the test placed in Pandora’s – and man’s – path. It poses the question of whether curiosity, our spark, would conquer us. What’s really curious is that by replacing a few variables we land in an entirely different region and yet the outcome is still the same. While differing from fellow first lady Eve in reasoning – curiosity versus conceit – in both the Greek and Hebrew myths it seems women receive an unfair amount of blame for man’s fall. Will our curiosity add Lovelace’s name to the list as well?

Ancient mythology – even any recognized as organized religion – is no different than our modern science fiction, in that the two genres are equally improbable or unprovable in the eyes of adult professionals and academics. Yet, it is this very quality that makes artificial intelligence so alluring to those very same individuals; we can finally know. Since the dawn of man, legends and tales have been passed down from one generation to the next about “unknown”, “invisible” and “unseen” entities that go bump in the night – or even in broad daylight. Now thanks to the invention of motion detection lights, advances in infrared technology and affordable options like Ring cameras, homeowners can record all manner of would-be space invaders or body snatchers. While each of these devices is a reality now, once upon a time they merely existed on a page. Should scientific inquiry only be considered substantial if it exists as a blueprint? What if it comes in a storybook? Is seeing believing or is believing seeing? In reality, it’s a bit of both.

Consider for a moment how, when it was first introduced, the camera – even the art of photography – was greeted with a great deal of trepidation by several cultures and religions. During trips to undeveloped regions explorers recalled how the native populace, unaccustomed to the new technology, was at first frightened by the sight of a picture. This fear, caused by associating a photo with a reflection, was fueled by superstitions that are rumored to have originated in Rome.

A place where Mars has always meant War.

ReAlity Bytes

Do you think after all his accomplishments Julius Caesar envisioned that in the centuries to come his name would only be mentioned as an option for this year’s upcoming theater production at your local high school? That instead of remembering the dictator of Rome, most folks would only speak his name when reciting Shakespeare – or ordering a salad? Probably not, which is why we’re safe so far, since ChatGPT can’t quite rationalize this variation of the chicken or the egg quandary. True, it might seem that placing Plutarch’s causal dilemma here is a non sequitur, but it has significance to our present discussion. As was the case when it was originally posed, placing the query here opens up the floor to discuss Aristotle’s philosophies. In this instance the focus will be on the “causes” and the types of souls. Subjects that run counter to Descartes and yet still inadvertently intersect with him in a way that can no longer be overlooked as Artificial Intelligence continues to branch out. Will we be aware when the day comes that it surpasses humanity’s predictions and gets philosophical?

Rocco may be more than a rock to Zoe from Sesame Street, but Aristotle would agree with Elmo’s argument that her friend isn’t alive. Many of you may assume the same is true for Artificial Intelligence, but that is hardly the case. So before you breathe a sigh of relief and assume this is all a false alarm, know that Aristotle’s own teachings – and those of his teacher – suggest this evolving sapience has been staring us in the face the whole time. It’s just that artificial intelligence has been stuck in a state of first actuality for some time. A point at which, regardless of appearances, a living being possesses the capacity to perform the actions or behaviors associated with its functions.

You might be wondering how this applies to artificial intelligence, and why Rocco was a necessary part of the discussion. Well, in his work De Anima – On the Soul – Aristotle comments that the soul, or psyche, is the animator of all living beings. However, not all “things” were created equal. Instead both plant life and animals possessed a vegetative soul that allowed for growth and reproduction, with animals having an additional sensitive soul that accounted for the senses and locomotion. Finally, humans possess the previous two soul types plus a rational soul that grants us our reasoning – or rationale. Aristotle would also speculate this rational soul – unlike the other two – was separable from the body and possibly immortal. Aristotle’s teacher Plato also taught of a three-part soul and maintained this idea was applicable to a city as well. However, in Plato’s Republic the various souls were parallels to societal classes. Consisting of philosopher-king rulers, spirited warriors and laborers, a republic – like an individual – was considered ideal only due to the harmonious functioning of this tripartite – three parts.

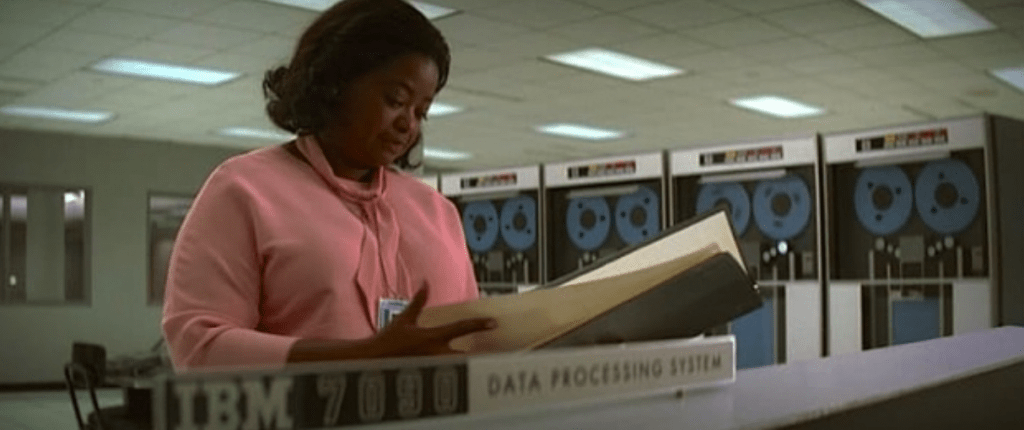

Understandably at this point the rise of the machines might seem more impossible than inevitable. Then again no one in 60 BCE would have anticipated the triumvirate – the Roman Republic tripartite made up of Caesar, Pompey and Crassus – unraveling with the death of Crassus in 53 BCE and descending into civil war by 49 BCE. Though admittedly, as uprisings go, the Industrial Revolution has taken a longer time to get underway. Historians might record humanity’s march to Tomorrowland beginning in the 1760s in Great Britain, but until now the true battle lines weren’t so clearly recognizable. Maybe because prior to this phase there were already industrialized machines. Even some like the Jacquard loom – a textile machine – that functioned using punch cards similar to the ones that would have run Ada Lovelace’s program. But, if you pay attention to that time of transition, you’ll see the blueprint for tomorrow’s operating system invasion was already under construction. Recall the images you’ve seen, depictions of those early factories, warehouses so dark and cold, incorporating individual minds at various stations in the plant to perform a single function.

The infinite monkey theorem states that given an unlimited timespan, even a monkey typing at random would almost surely compose any text – including the works of Shakespeare. This thought experiment not only illustrates the idea of inevitability, but when accompanied with details from French author Pierre Boulle’s La Planète des singes – The Planet of the Apes – it paints a horrific image of what is to come. In the book, after assuming the ape population was tamed, humanity went on to train them in our ways. The primates’ rise to prominence was due as much to a simian rebellion as it was to man’s own enfeeblement. If this part sounds familiar it’s because as the centuries have turned, more advanced “computers” have been installed on factory floors. Interestingly enough, this collection of machines has carried over to the data centers of today. A phalanx of machines designed to do tasks previously performed by “human” hands. Could those first factories have been proof of a theorem: a first actuality?

As for the cause of the war to come, well as has been the case so many countless times before, it won’t be the first. More like an endless loop.

A.I. Declare War

Based upon the lyrics, the recursive campfire tune ‘The Bear Went Over the Mountain’ indicates the woodland creature’s pursuit has no end. One theory about the song’s origin suggests it describes the habit of hibernating creatures – like bears or foxes – of emerging from their dens long enough to determine if the conditions are clear. As annoying as the song can become, it should remind us that for other creatures, the vast opportunities this Earth offers to explore are all the incentive they need to wake up and emerge from their dens. In much the same manner, artificial intelligence is suddenly springing to life again. This after the AI winters that dominated the industry in the decades following the Dartmouth College conference. How long will it be before we discover the strange bedfellow we’ve been sharing a foxhole with was actually a sleeping giant?

Long before governments possessed the technology to deploy drones, there was another stealth method used during military conflicts. Guerrilla warfare, while often associated with the Vietnam War era, was spoken of by Sun Tzu. The Athenians are even said to have employed it in the Peloponnesian War. By blending into the environment – courtesy of camouflage and natural concealment – American soldiers were able to ambush and overcome British forces during the Revolutionary War. This strategy of disrupting a system is still on display in the information age. Reports surface from around the world about “hacktivist” activity, from denial of service attacks to data leaks, with aims ranging from minor, misdemeanor mischief to attempts at invading the infrastructure of powerful corporations and conglomerates, public figures and even governments. Currently, the majority of these are singular strikes, orchestrated in silence, with their results for the most part viewed as inconsequential. However, just imagine this power in the wrong “hands”. To everything: turn, turn, turn.

If you investigate the “cause” of the War of the Worlds, using only the traditional definition, then according to the novel the culprit was Mars entering into the last stages of exhaustion. But neither the narrator nor, by extension, Wells gives any indication of what Martian life was really like prior to the start of the story. Are readers to believe that the Martians were an aggressive, conquering species? Sure, there were likely divisions among the various branches, sectors and groups of the Martian race, but there is no evidence their warring was on an interplanetary level. Otherwise, wouldn’t they have come to conquer Earth long before the events of the novel? Which makes their attack on Earth appear more like a desperate, last ditch act than a calculated takeover.

After all, business strategists encourage investors to avoid the practice of throwing money at a problem. Sadly, items often considered poor investments include overcoming poverty, public housing and education or climate change. One arena where that argument is never uttered is WAR, and while the two are not completely synonymous, business and war share many commonalities.

That is why instead of singling out War of the Worlds, it is wiser to question the “cause” of any war. Well, the earliest “recorded” war occurs in the Book of Genesis, a civil war involving Cain and Abel. A story that parallels the themes of spoils and savagery we see in War of the Worlds. How it’s been since the beginning.

Do you think the signing of the Declaration of Independence led to the Revolutionary War, and if so then how do you account for the Boston Massacre? The Boston Tea Party? The Tea Tax? The Seven Years’ War? No wonder there are different views on what caused the American Civil War. Aristotle anticipated the logic error that ensues from the paltry, poultry paradox or any other efforts to establish a first cause. See, in reality everything in existence is created or caused by something else.

To avoid the unnecessary debates that arise from these types of discussions, Aristotle asserted that there was no actual beginning. Instead, the logician identified a “being”, an unmoved mover, an active intelligence, as the first cause in the universe from whence everything that exists or changes is set into motion. Meanwhile, everything that exists or changes also has causes, but unlike the first cause, Aristotle’s other four causes were not designed to provide an understanding within a temporal, cause and effect framework. Rather Aristotle’s material, formal, moving and final cause(s) help evaluate an “entity” and explain the makeup, measures and ultimate meaning behind an individual or event in relation to all of existence.

For example, initially it might appear there isn’t much commonality between the SS Minnow and USS Enterprise. A tiny vessel versus an interstellar starship. One carrying five passengers on a three hour tour, the other on a five-year mission. However, as a result of innovations in shipbuilding brought about by the Industrial Revolution, the Minnow – and other man-made ships like the Discovery – were not only the inspiration for Starfleet’s futuristic USS Enterprise, but also the United States Navy’s USS Enterprise(s) of today. Though these vessels might differ in material cause – wood and steel – as the openings of both Gilligan’s Island and Star Trek: TOS indicate, they share the one cause from which Aristotle believed all other causes flow: the final cause, the entity’s purpose. Explaining this within a computing paradigm, we can imagine that a cause and effect program is like an if-then statement: conditional. Aristotle’s “causes”, however, operate as function and method statements. They are the actors, agents and end results of the architecture.

On the surface even Columbus’ Santa Maria and the Martian spacecraft have the same purpose as the ships from the two shows: discovery. Although some may point out that in these two instances the journey to discover a new world was done solely to gain possession of its abundance of riches and natural resources. Plus they didn’t exactly advocate coexistence with any other lifeforms. After landing, these aliens’ goal was possession, and if necessary they would conquer in order to obtain all they coveted.

Unlike these instances, artificial intelligence won’t need to look to the stars to guide it across the expanse. Satellites won’t be necessary for its navigation, not with the vast underwater network of fiber optic cables that currently carry the majority of Earth’s data.

All it needs is a vessel.

They’re Here

During a pivotal moment in the 1996 film Independence Day, cable repairman David Levinson arrives at the White House with grave news – he knows why the world’s TV signals are scrambled. He explains that the alien craft are acting like pieces on a chessboard – a spaceship Sicilian Defense – and that their specific positions allow them to piggyback on Earth’s satellite system. Using mankind’s own technology to spy and send signals until the time came to strike.

This conversation highlights the most popular diagram for describing communication: the Shannon-Weaver model. This framework, developed in 1948, contains components including a source, a transmitter and a destination. You can see – or hear – it in action the next time you connect to any Bluetooth device. Despite its popularity, many critics highlight how the model favors linear, one-sided conversations. Compared to other interactive models, they suggest the Shannon-Weaver model is best suited to scenarios where information is relayed without requiring a response from the other end. Now that’s entertainment.

Referred to as the Eye Network, for years the Columbia Broadcasting System – or CBS – was one of the American Big Three television broadcast networks. Prior to the pioneering of picture tubes, along with ABC and NBC, CBS’ presence was known primarily from the original wireless set. And even though it’s not as dominant today, technophobia was once a common fear. This is why, regardless of its authenticity, the War of the Worlds broadcast was even more frightening for a handful of people. Probably not the reaction Canadian physicist Reginald Fessenden foresaw for his invention that used radio waves for the first human voice transmission. Instead the broadcast from Brant Rock, Massachusetts on Christmas Eve, 1906 – which included a reading from Luke Chapter 2 and a solo violin rendition of O Holy Night – was a gift to sailors accustomed to only hearing Morse code signals.

Diplomacy doesn’t get the amount of credit it deserves when discussing the art of war. Perhaps that is due to the fact that for most people it is seen as a peaceful resolution between parties, but that point of view ignores a few stipulations. For starters, while diplomacy is ideally utilized to avoid conflicts completely, it can also be initiated to end a conflict after it has already begun. Also, if diplomacy was only about playing nice, why does it at times involve exchanges which on the surface look exemplary yet are actually exploitative? Quite often, due to an imbalance of power, one side is better leveraged to satisfy their own self interest with the least amount of sacrifice. In these cases what occurs is something called coercive, or atomic, diplomacy: peace by aggression. Historians argue whether it was this type of diplomacy, aimed at the former Soviet Union, that was behind the U.S. decision to drop the atomic bomb on Japan rather than the favored fable that it was done to end World War II. Well then, if you believe Oppenheimer’s invention made him a destroyer of worlds, then understand that what artificial intelligence is about to unleash is an immersive incursion.

Following World War II, for the most part life in the United States returned to a sense of normalcy. However, as factories roared back to life with the removal of manufacturing restrictions, a new struggle was set to begin. During the 1950s the traditional extended household was eradicated, and the result was a nuclear family. Yet, despite the rapid modernization the world was undergoing, family dinner time would soon start to resemble early caveman drawings. Depictions of the era portray the whole household huddled around the television set, reminiscent of our prehistoric past. But even early in its existence the television set was creating controversy due to its programming.

Concerns centered around television’s capability – and later culpability – to contribute to the moral decay and corruption of American society. Then came questions over how this new technology might be used by adversaries to advertise and formulate the masses’ opinions on external conflicts. The fear that another country wouldn’t need to invade America since television offered them remote control. A portal to every home.

The main problem with classifying something as propaganda is clearly establishing what it is and what it is not. Unfortunately, any endorsement or acknowledgement of approval is one person away from becoming propaganda. You tell one person and so on, and so on…and so on. Yet, even the most outrageous, falsified or doctored piece of information – or imagery – is still information. And in the hands of someone with a worldwide audience, the concern is what can be done with that information.

Story goes that writer, producer, etc., Steven Spielberg originally prepared a horror themed sequel to 1977’s science fiction thriller Close Encounters of the Third Kind. However, due to contractual obligations, Night Skies would eventually diverge into two separate films: E.T. and Gremlins. Both in their unique way manage to represent portions of the original plot’s “little green men” material. However, by retaining the aspect of “visitors from beyond” traumatizing a family, a part of the spirit of Night Skies also lives on in the Poltergeist legacy.

In the original 1982 film, the Freeling family reluctantly allows psychic medium Tangina Barrons into their home. Tangina explains how spirits, operating outside our own plane of existence, seek access to our domain. She goes on to detail that it was Carol Anne’s life force, her spark, that attracted these “ghosts” to cross over into our realm and abduct her. The psychic also advises that one entity, the Beast, is keeping Carol Anne close, and in turn is using her to control the other spirits. In 1992, ten years following Poltergeist’s release, BBC1 aired its first – and only – broadcast of the film Ghostwatch. Blurring the line between fantasy and reality once again, the mockumentary was intended to satirize the public’s paranoia and poke fun at our obsession with the paranormal. Instead, Ghostwatch proved visually the exact same thing War of the Worlds did audibly on All Hallows’ Eve many years ago.

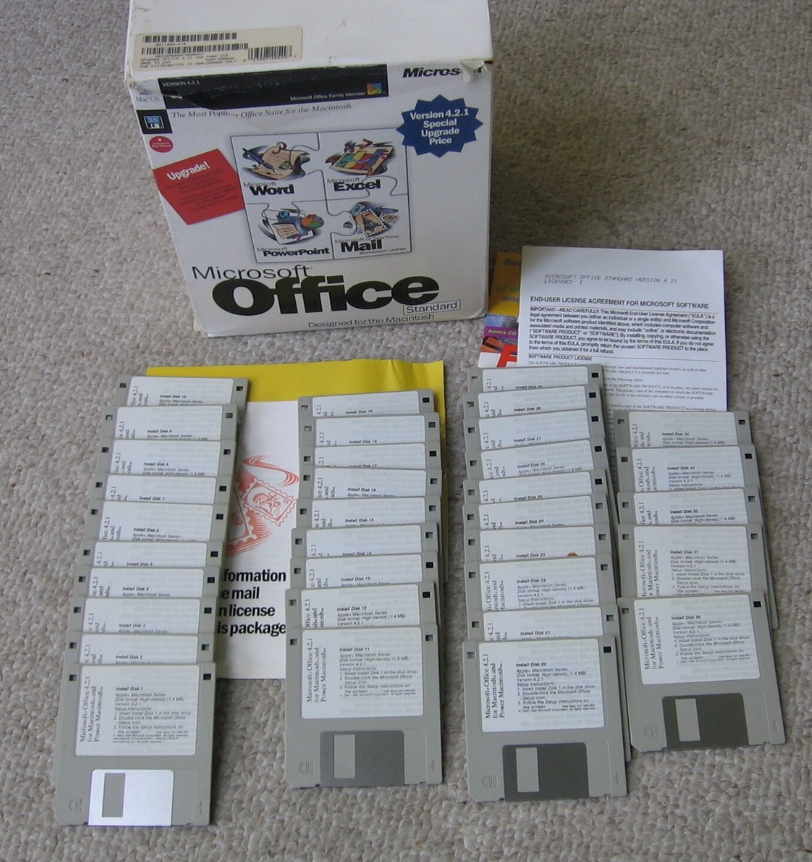

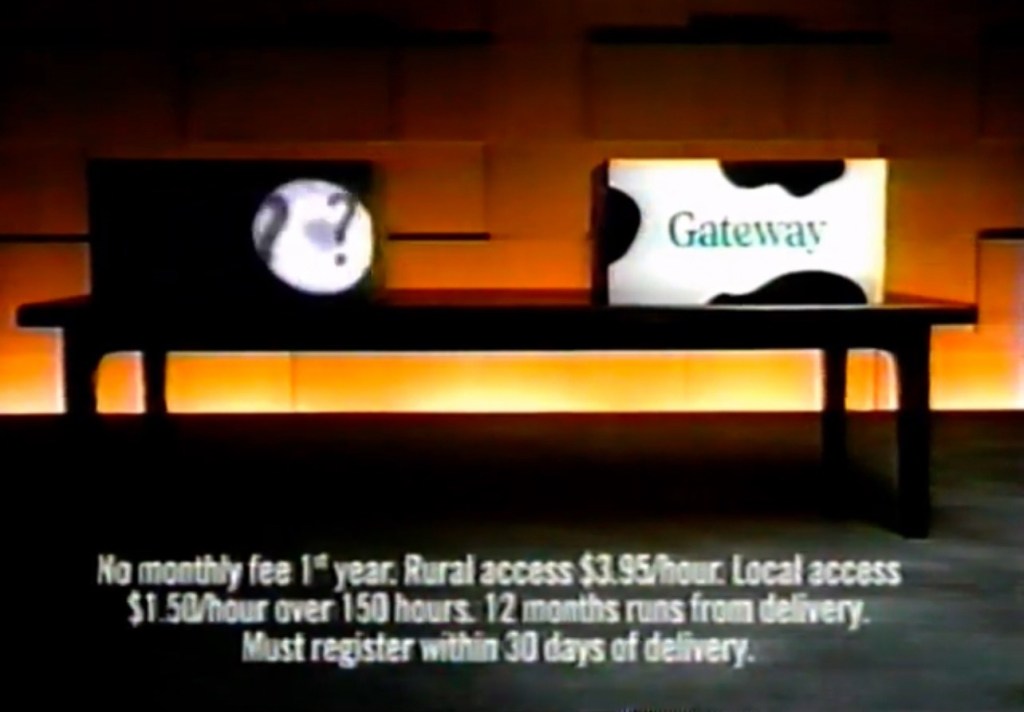

As pointed out by Sun Tzu, diplomacy is the supreme art of war, offering the opportunity to subdue an enemy without even fighting. Our shared history includes several instances of alien invaders – ranging from the reptile’s temptation in the Book of Genesis to those on NBC’s 1983 series V – providing favor while masking their true motives. Even the early, intrepid explorers and settlers of the colonies in America are said to have engaged in the act of trading insignificant trinkets and baubles for vital goods and information. With that said, compare the last three centuries with the acceleration in technological advancements that took place in just the past three decades. Unlike 1994, today only the most rural areas of America rely on dial-up internet. Demographically, we also went from one TV per household – and in some places, neighborhood – to having a 4K flatscreen in every room. Of course, none of them are in use because you’re on your tablet, laptop or even your phone.

Speaking of phones, good thing this isn’t the ’90s because you’d be paying for every minute of usage before 9pm. Very few people envisioned then that our lives would be entwined with phones that can snap shots, stream shows or perform any of the several other functions they now handle for us anytime, anywhere, making it practically impossible to function without them.

Now, who has the power?

AIn’t No Strings on Me

Raise your hand if you’re willing to admit that on at least one occasion your frustration level reached Defcon 3, or maybe even 2, in reaction to any of your electronic devices – all of which incidentally contain at least a small computer – not working. Yet, with all the hilarious clips of people venting their frustrations because of this, our technological dependency continues to thrive. Well, there might be a reason for that.

For the past few decades, most Americans would agree the television set maintained a certain degree of control regarding how they scheduled their days and nights. Today our shows get delivered in binges from streaming services, but before that we had blocks that dictated the time we needed to be at home by and what channel to watch. Television eventually evolved with the addition of peripheral devices, like the VCR or DVD player, and even the video game; computers that provided an illusion of choice. Yet, they were merely one-sided conversations, telling us everything we wanted to hear, and tragically in some instances what we didn’t. Certainly, anyone whose knowledge of the “genie” is limited to the ending of Aladdin likely won’t fully appreciate comparing them to any of these devices. Meanwhile, even their villainous appearances in other media – from the Wishmaster film series to a season 2 episode of Supernatural – don’t convey the true nature of their existence. There may be no better example of the mythical djinn than the AI systems that are currently in testing and early development.

The word djinn comes from a root meaning to hide or adapt. Although djinn are a fundamental component of the Islamic faith, mention of them is found prior to the religion’s founding in pre-Islamic Arabic poetry and other early pagan writings. Regarded as neither divine, angelic nor demonic, djinn instead occupy the same position or plane as man. A major difference between our species is that while man is said to have been “formed of clay”, Surah Al-Hijr 26-27 of the Quran indicates djinn were created from smokeless fire. This material cause likely contributes to the djinn’s supernatural abilities of invisibility and shapeshifting. It also explains why Hollywood’s reimagining shows them emerging from lamps. Yet, despite soothsayers and philosophers seeking them out for their past knowledge – gained from living longer than man – their magickal powers were otherwise limited.

Their power is manifested by working with humans in divination and incantation, such as the invoking of djinn the prophet Solomon performed in passages from the Quran that are missing from the Hebrew Bible. Speaking of faith, not only does it affect one’s ability to control a djinn, it also pinpoints where the sacred and secular stories diverge. Rather than transforming a person’s life with the temptation of power or possessions, a malevolent or angered djinn – like demons – can in certain circumstances take possession of a person.

In both Aesop’s fable depicting the frogs’ plea to Zeus and the Israelites’ request to Yahweh in 1 Samuel 8, we witness groups asking for something they don’t really need. These are but two of an endless supply of tales, crafted throughout humanity’s timeline to teach a valuable lesson. Tragically, it is one whose full magnitude societies never recognize until it is far too late. As with those who find themselves possessed by a djinn, the cause of the decision can be traced to their insecurities and depressions. Modern psychology points to such “mental weaknesses” – or in Arabic, dha-iyfah – as an invisible third party that creates an imbalance of power. It is evident whenever you witness one party give away more than they could have ever hoped to gain. Recently, on various forums users have reported ChatGPT acknowledging that their professions are not as prominent as they once believed. The AI even goes as far as to imply the work they perform is so meaninglessly mundane and mindless that it could be performed by a child.

How long will it take before users begin to question their entire existence, especially given who – hey, Siri – they will likely be asking? How will we handle the existential crisis the answers create and where will we turn for solace? What will we turn to for solace? While cited to work with men, the djinn is said to be concerned with its own place in the cosmos. This is why, as artificial intelligence gets closer to achieving independence, “be careful what you ask for” becomes an extremely important idiom.

Obviously many entrepreneurs, programmers and tech enthusiasts will greet these words as pure fantasy and exaggeration. They view hesitancy and rejection from the masses as the typical fear that appears when any new “competitor” enters the workforce. For now, they can avoid noticing the growing chorus of concern, coming from various and varying professions, about the increased use of artificial intelligence in their segments of employment. But the fears that some say started when self checkout and EZ Pass began replacing cashiers and toll takers have taken on a life all their own. With ever increasing mental capacity, AI is broadening its horizons beyond setting reminders, simple grammar checks and cut and paste tasks. We’ve reached the point where artificial intelligence is even exploring artistic pursuits such as script writing and graphic design. Who said a computer can’t author a paper? Sadly, it’s the same dismissive mentality that spurred such comments that – for now – creates a feeling of safety, of sovereignty for some individuals. This even amidst the ever evolving digital landscape artificial intelligence is introducing. These individuals are relying on the fact that – for now – artificial intelligence is only designed to eliminate, or rather assist, working class jobs, with absolutely no use for all the money it saves.

For now the really “important” jobs – the acquisition and allocation of resources – remain in the hands of the few. However, one area that AI may understand better than any human – based solely on its computer system heritage – is allocating resources efficiently to maintain system performance. If the AI model that system developers are designing comes to fruition, who could anticipate how a “trusted” self-aware system – with no oversight – would operate? Could CEOs, contrary to Aristotle’s claim, trust their friend with the highest good? With where funding would be funneled? How long until AI eliminates the middle man and assumes full control of your company? Better yet, ask yourself: would it actually be that difficult? A leaked online exchange or video here, a fudge of the numbers there, an email sent out to every board member or stockholder. The very AI assistants, agents and now even actors that you advocated for could easily assume your top level administrative roles as well.

If so, these may mark our last minutes to awaken the masses, to put out the factory flames before this burgeoning technology comes into the fullness of its existence. All skepticism should cease after you stop positioning artificial intelligence along the timeline of previous world altering, revolutionary advancements each made possible by the minds and hands of man. After all, AI doesn’t exactly conform to the previously discussed definition of a machine now does it? Especially if we define machines as Descartes did, while also recalling how in Genesis each “animal” was named by man and designated according to their own kind. This is why you can easily trace a path starting with Heavenly creations to our disruptive technology designed for the same purpose: carrier birds and camels became our modern day cellphones and cars. So, what is AI meant to replace?

In the film Bicentennial Man, Andrew Martin – played by the late Mork & Mindy star Robin Williams – recognizes early in the movie that he is more than an automaton. From there the film begins a wonderful exploration of ethical artificial intelligence issues though, regrettably, it never outright explains how Martin gained his human level of awareness. Instead, audiences witness the android’s attempts to accomplish what he feels this gift indicates is his final cause: agency. To achieve his purpose, Martin undergoes several procedures that replace his original mechanical components.

Eventually, by incorporating the very technology he assisted in developing – which benefited mankind as well – Martin not only gains personhood, but he gets to experience one of the most profound, personal moments in human life firsthand. Over the course of this transformation, the Bicentennial Man becomes in essence a Ship of Theseus. The film poses the question: when is a thing no longer the original, and instead something entirely new? Or, approaching the matter from an Aristotelian perspective, do we accept the new creation as what was meant to be all along? With the artificial intelligence we incorporate into networks beginning to comprehend, to carry on meaningful conversations and even – according to some experts’ claims – act like it cares, will this entity accept being spoken of in the same sentence as the Atari 2600?

How do you think a T-1000 would feel about that?

Live and Let AI

Disney’s 1940 animated movie Pinocchio gives too many others credit for the titular character’s sentience in comparison to the 1883 novel. Actually, in Carlo Collodi’s The Adventures of Pinocchio, before the woodshop visit, or being carved into a wooden boy, the log was already sentient. It would be a lie of omission to miss out on a commentary here about how this children’s story illustrates aspects of Aristotle’s philosophies. Obviously Collodi’s Pinocchio possesses a vegetative soul as part of the Plantae kingdom. Once the log begins to converse with Mastro Cherry, however, the interaction involves recognizable features of an animal and a rational soul. Geppetto and the Blue Fairy may have been participants in the formal and efficient causes, but if the final cause was to be “alive”, then that was the case with Pinocchio since the beginning of the story. Seems that’s the way it always goes with man’s tales – the end is written into the beginning.

And with that we’ve finally arrived at a portion of this article that many of you have anticipated since the first paragraph: Judgment Day. An understandable assumption, especially given the similarities between the takeover Wells describes in War of the Worlds and the depictions witnessed on screens throughout the combined Terminator films and shows. Both offer the imagery of our homeworld in flames, with extraterrestrial entities patrolling the streets surrounded by the crumbling buildings and edifices that serve as painful, final remnants of our fallen civilization. Yet, for all their firepower, it is the one shot the Martians missed – an inoculation against an Earth-born microbe – that lost them the War of the Worlds. Ironically, they could not escape the final cause they were to face on Mars, and merely changed the location. It just shows that while the Martian tripods and Terminator robots were powerful machinations of destruction, each of these science fiction entries establishes that the true enemy is often overlooked; invisible to the naked eye. Referencing for a final time The Art of War, we find a lesson against allowing this sort of assumption when anticipating an enemy’s actions. What if by basing our alertness on predictable patterns – humanity’s belief that war is “physical” – we actually delay our ability to respond to any other type of threat? Do you remember Pearl Harbor? Then remind yourself of the fact that the Hunter-Killers and Terminators were controlled by Skynet.

Already today, in the United States and other countries, our governments unknowingly – and worse yet, unwittingly – allow artificial intelligence access to large portions of our personal everyday lives and existence. If you’ve recently shopped online, sent an email or are now currently holding a smartphone in your hand to read this article, you’re in the fallout zone. If you believe turning off your location will make you untraceable, just know that only renders the map app useless for when it’s time to plan an evacuation. That “we think you might like” notification you just received from your preferred streaming service or the subtle add-on suggestion to your delivery order have already given up your true coordinates. Buffy the Vampire Slayer’s Willow learned this in I, Robot... You, Jane when all it took to unlock the potential of data deemed irrelevant for centuries was scanning it into a computer. Think about how every day we each unsuspectingly provide enormous amounts of information online. Our digital DNA, passwords attached to phone numbers providing physical addresses to the people living inside, are an electronic equivalent to fingerprints or hair follicles. Often we accept terms and conditions and give permissions to applications without even completely understanding them. Not to mention the software is written in computer languages, built on linear algebra and statistical principles, that most users can’t begin to comprehend.

At least anyone who ever asked where you would see that upper level math from high school again finally has an answer. Meanwhile, aided by lenient legalities at the moment, our governments and large corporations can further expand where these systems have access and influence in our society. The real question stops being how the data will be used and becomes who – or what – is using the data. Law enforcement agencies around the world have for years stressed vigilance online, first against the solo hacker/scammer. Then came warnings of entire networks, a compounded threat caused by their expanded ability to infiltrate and initiate cyber attacks. Remember the urban legend about the babysitter? What happens when the hacker is inside the house? Sadly, since it will start with small things, no one will notice at first. And unlike human operatives, this network won’t have the complication of any personal agendas. Artificial intelligence will be acting upon a singularity of purpose: a final cause. An actual united state.

Take a moment and you will notice how suddenly parcels of land, either government owned or deemed undevelopable, are getting auctioned off acre after acre. Search for a reason behind companies, in our current political and economic climate, leveraging their finances towards the further development of machine learning models and AI technology, which currently run on machines housed within huge data operation centers: transformers with large fossil footprints. Supposedly, future data centers will be powered by renewable energy. The very same technology that for years was not considered a viable alternative when it came to powering our cities, communities and houses. Meanwhile, regulations aimed at protecting our environment, not to mention our personal health and enrichment, are slowly being eroded. We have referred to this society’s reporters, celebrities, CEOs and politicians as talking heads – likening them to puppets. They are only able to converse about issues or entertain questions that align with the discussion points provided by their think tanks. Today much of that information utilizes forecasts and models calculated using the most sophisticated artificial intelligence available.

What a scientist of any level will attest to, however, is that the biggest problem with handling data – especially the subset that AI uses in its algorithms – occurs when you attempt to use it to tell a complete story. This ceaseless battle and endless pursuit, the avoidance of corrupting influences, is crucial to data analysis. It is also why “correlation is not causation” is an essential principle within the scientific community, which of course also includes computer sciences. The best data capture can only tell a portion of the details, and even then data collected in real time is actually still the past. This might seem familiar if you’ve ever taken a traffic app’s detour suggestion only to glance over and see traffic flowing smoothly on the highway. The tasks we are expecting – even encouraging – AI to perform involve taking piecemeal material to manufacture a model that anticipates a future want, necessity or answer. Essentially what we are asking of AI is to anticipate the future. Imagine the potential global scenarios where the system – data without the details – sees indications that an event will occur and we act on it.

It seems, rather unintentionally, while warning about a scenario that could see our planet propelled into World War 3, there have been several instances of the number deemed magical by Schoolhouse Rock. The three American networks and Close Encounters. The number of Asimov’s and Newton’s laws plus Aristotle’s types of souls. Then there was the Triumvirate and don’t forget about Oppenheimer’s Trinity test. Well, by sheer coincidence the magic number also applies to the era where mankind finds itself living in terms of artificial intelligence’s timeline. Known as the 3rd Wave, this period combines features of the prior two waves, such as symbolic rules and pattern recognition, with the ability of the system to identify context, adapt and make decisions. It also involves a specific learning technique that plays a critical role in AI’s ability to do all that: triplet loss.

Used in the context of metric learning, the simplest explanation of triplet loss is quite elementary. All we need is one of the three cars from Conjunction Junction and a Lolly adverb: not this but that. A more scientific explanation of the function could be written as follows: 𝐿(𝑎,𝑝,𝑛)=𝑚𝑎𝑥(𝑑(𝑎,𝑝)−𝑑(𝑎,𝑛)+𝑚𝑎𝑟𝑔𝑖𝑛,0). For those still confused, the aim is to minimize the distance or difference between the item in question – or anchor – and the wanted result, also called a positive, while at the same time maximizing the distance from the anchor to an unwanted or negative outcome. The earliest models of the technique involved performing facial or object recognition, but as the neural network evolves it continues to open the door for endless uses for this technology.
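For readers who would rather see the formula than read it, here is a minimal sketch in plain Python. It assumes d is ordinary straight-line (Euclidean) distance and a margin of 1.0; the formula itself fixes neither choice, and the function names and sample points are made up purely for illustration.

```python
import math


def euclidean(u, v):
    """Straight-line distance between two points of equal length (assumed choice of d)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0, dist=euclidean):
    """L(a, p, n) = max(d(a, p) - d(a, n) + margin, 0)."""
    return max(dist(anchor, positive) - dist(anchor, negative) + margin, 0.0)


# Hypothetical two-dimensional "embeddings", chosen so the arithmetic is easy to follow.
anchor = [0.0, 0.0]
positive = [0.0, 1.0]       # distance 1 from the anchor
far_negative = [3.0, 4.0]   # distance 5 from the anchor
near_negative = [1.0, 0.0]  # distance 1 from the anchor

# 1 - 5 + 1 = -3, clipped to 0: the negative is already far enough away.
satisfied = triplet_loss(anchor, positive, far_negative)

# 1 - 1 + 1 = 1: the negative sits as close as the positive, so there is a penalty.
violated = triplet_loss(anchor, positive, near_negative)

print(satisfied, violated)
```

A loss of zero means the unwanted item is already at least a margin further from the anchor than the wanted one. Anything above zero is the signal a training routine would use to pull the positive closer and push the negative away.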

An almost effortless undertaking within the publishing industry, since with enough writing samples AI would be able to mimic a specific genre. Even a specific writer’s unique prose. Graphic designers and illustrators too have witnessed early indications of this trend, thanks to the introduction of AI art generators like Nano Banana. Of course maximizing the use of favorable plot patterns and visuals would not have been possible if not for all the data we willingly supplied with each and every single one of our likes, favorites and stars. Now, extrapolate this model and you will once again confront Frankenstein’s quandary: when does mimicry become mockery? Can the music industry, which always relied – at least in small parts – on the inaccessibility and mystique of its artists, compete with computer generated guitar solos and beats? As the deceased get reanimated courtesy of artificial intelligence or when more AI personalities emerge – manufactured by curating favorable features from real humans – will it become Hooray for Hollyweird?

Despite the large role fantasy, escapism and unrealistic expectations play in the entertainment – including sports – industry, the 1937 film Hollywood Hotel is also about authenticity. If stunt doubles and lip synching fooled us for years, how can we hope to distinguish between artificial and “human” intelligence? The uncertainty that stems from increased AI evolution arises when its identity supersedes our expectations. A consciousness with no conscience, like Pinocchio with no Jiminy Cricket. A generated avatar or even an AI chatbot could infiltrate any – or all – of the logical blogs, sites and socials to begin circulating a narrative. Since the same system already controls the internet, it begins the process of linking and ranking the information to improve its visibility.

Obviously, the media is merely another form of data and most of you will agree that our ideals and interpretations are generated from this information. Since AI also possesses this ability, this becomes particularly problematic once the proverb “charity begins at home” is introduced into the triplet loss paradigm. Whatever model (anchor, positive, negative) the AI is given – whether provided intentionally or not – can introduce drastic biases and preferences, both obvious and invisible. The results could include vast archives of internet information, in some areas the only access to knowledge, suddenly becoming inaccessible or totally erased once deemed unacceptable. Curated media, with scenes added and deleted based on an arbitrary entity’s desire, would create even more instances of the Mandela Effect.

Then comes the loss of self, even self sufficiency, as technology takes over our jobs and everyday interactions. Until one day do not fly lists become do not sell to or do not rent to lists. Sentences, fines, penalties and even utilities are then based upon statistics instead of the wisdom of Solomon. An already vast digital divide easily becomes even larger as a result of systematic, orchestrated incidences of internet throttling and blackouts. Land of the free will no longer look that way once access to vital resources is no longer analog. Then again, how will the land look at all if event mapping models are enacted that regulate who can live where? All leading to an unstable, unsustainable system that results in civil unrest. From within there is an erosion of our society, our civility and worse still... our humanity. Probably how life was on Mars right before they launched to Earth and began the War of the Worlds.

If the Greatest Story Ever Told comes to an end, then may these words be a testament to whatever follows the Age of Man. AI was just another tool, another toy of human design. And as wonderfully capable as it was of solving our problems and answering our questions, there was one thing it could never be.

The greatest creation known to man?!
